When Using A Significance Test Calculator, What Is The “margin Of Error” Called?

Statistic expressing the amount of random sampling error in a survey's results

Probability densities of polls of different sizes, each color-coded to its 95% confidence interval (below), margin of error (left), and sample size (right). Each interval reflects the range within which one may have 95% confidence that the true percentage may be found, given a reported percentage of 50%. The margin of error is half the confidence interval (also, the radius of the interval). The larger the sample, the smaller the margin of error. Also, the further from 50% the reported percentage, the smaller the margin of error.

The margin of error is a statistic expressing the amount of random sampling error in the results of a survey. The larger the margin of error, the less confidence one should have that a poll result would reflect the result of a survey of the entire population. The margin of error will be positive whenever a population is incompletely sampled and the outcome measure has positive variance, which is to say, the measure varies.

The term margin of error is often used in non-survey contexts to indicate observational error in reporting measured quantities. It is also used colloquially to refer to the amount of space or flexibility one has in accomplishing a goal. For example, sports commentators often use it to describe how much precision is required to achieve a goal, points, or outcome. A bowling pin used in the United States is 4.75 inches wide and the ball is 8.5 inches wide, so one could say a bowler has a 21.75-inch margin of error (the pin's width plus one ball width on either side, 4.75 + 2 × 8.5) when trying to hit a specific pin to earn a spare (e.g., one pin remaining on the lane).

Concept

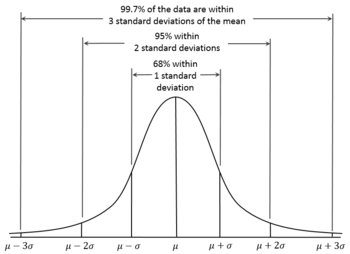

Consider a simple yes/no poll $P$ as a sample of $n$ respondents drawn from a population $N$ ($n \ll N$), reporting the percentage $p$ of yes responses. We would like to know how close $p$ is to the true result of a survey of the entire population $N$, without having to conduct one. If, hypothetically, we were to conduct poll $P$ over subsequent samples of $n$ respondents (newly drawn from $N$), we would expect those subsequent results $p_1, p_2, \ldots$ to be normally distributed about $\overline{p}$. The margin of error describes the distance within which a specified percentage of these results is expected to vary from $\overline{p}$.

According to the 68-95-99.7 rule, we would expect 95% of the results $p_1, p_2, \ldots$ to fall within about two standard deviations ($\pm 2\sigma_P$) either side of the true mean $\overline{p}$. This interval is called the confidence interval, and the radius (half the interval) is called the margin of error, corresponding to a 95% confidence level.

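Generally, at a confidence level $\gamma$, a sample of size $n$ drawn from a population with expected standard deviation $\sigma$ has a margin of error

$$MOE_\gamma = z_\gamma \times \sqrt{\frac{\sigma^2}{n}}$$

where $z_\gamma$ denotes the quantile (also, commonly, a z-score), and $\sqrt{\sigma^2 / n}$ is the standard error.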
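As a minimal sketch of the formula above, the following Python snippet computes a margin of error from an assumed standard deviation and sample size. The names `z_score` and `margin_of_error` are illustrative and not from the source, and the quantile is assumed to be two-sided, so that a 95% confidence level gives roughly 1.96.

```python
from math import sqrt
from statistics import NormalDist


def z_score(gamma: float) -> float:
    """Two-sided standard normal quantile for a confidence level 0 < gamma < 1."""
    if not 0.0 < gamma < 1.0:
        raise ValueError("gamma must lie strictly between 0 and 1")
    # Assumption: gamma is the central probability mass, so the upper
    # cut-off sits at cumulative probability (1 + gamma) / 2.
    return NormalDist().inv_cdf((1.0 + gamma) / 2.0)


def margin_of_error(sigma: float, n: int, gamma: float = 0.95) -> float:
    """Quantile times the standard error sqrt(sigma^2 / n)."""
    standard_error = sqrt(sigma ** 2 / n)
    return z_score(gamma) * standard_error


# Hypothetical inputs: sigma = 0.5 and a sample of 1,013 respondents.
print(f"{margin_of_error(0.5, 1013):.4f}")
```

Passing the standard deviation in explicitly keeps the sketch independent of how it was estimated.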

Standard deviation and standard error

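We would expect the normally distributed values $p_1, p_2, \ldots$ to have a standard deviation that varies with $n$: the smaller $n$, the wider the margin. This is called the standard error $\sigma_{\overline{p}}$.

For the single result $p$ from our survey, we assume that $p = \overline{p}$, and that all subsequent results $p_1, p_2, \ldots$ together would have a variance $\sigma_P^2 = p(1-p)$.

$$\text{Standard error} = \sigma_{\overline{p}} \approx \sqrt{\frac{\sigma_P^2}{n}} \approx \sqrt{\frac{p(1-p)}{n}}$$

Note that $p(1-p)$ corresponds to the variance of a Bernoulli distribution.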
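The thought experiment of repeating the poll many times can be tried numerically. The sketch below simulates repeated yes/no polls and compares the spread of their results with the square root of p(1 − p)/n. The population share, sample size, number of polls, and seed are all hypothetical choices made for illustration.

```python
import random
from math import sqrt
from statistics import pstdev


def simulate_polls(p_true: float, n: int, polls: int, seed: int = 0) -> list[float]:
    """Return the 'yes' share from `polls` independent samples of n respondents."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [
        sum(rng.random() < p_true for _ in range(n)) / n
        for _ in range(polls)
    ]


p_true, n = 0.5, 400  # hypothetical population share and sample size
results = simulate_polls(p_true, n, polls=1000)

empirical = pstdev(results)                    # spread of the simulated poll results
theoretical = sqrt(p_true * (1 - p_true) / n)  # standard error from the formula

print(f"empirical spread:  {empirical:.4f}")
print(f"theoretical value: {theoretical:.4f}")
```

The two numbers should be close but not identical, since the simulation itself is subject to sampling noise.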

Maximum margin of error at different confidence levels

For a confidence level $\gamma$, there is a corresponding confidence interval about the mean, $\mu \pm z_\gamma \sigma$, that is, the interval $[\mu - z_\gamma \sigma, \mu + z_\gamma \sigma]$ within which values of $P$ should fall with probability $\gamma$. Precise values of $z_\gamma$ are given by the quantile function of the normal distribution (which the 68-95-99.7 rule approximates).

Note that $z_\gamma$ is undefined for $|\gamma| \geq 1$; that is, $z_{1.00}$ is undefined, as is $z_{1.10}$.

| $\gamma$ | $z_\gamma$ | $\gamma$ | $z_\gamma$ |
| --- | --- | --- | --- |
| 0.68 | 0.994457883210 | 0.999 | 3.290526731492 |
| 0.90 | 1.644853626951 | 0.9999 | 3.890591886413 |
| 0.95 | 1.959963984540 | 0.99999 | 4.417173413469 |
| 0.98 | 2.326347874041 | 0.999999 | 4.891638475699 |
| 0.99 | 2.575829303549 | 0.9999999 | 5.326723886384 |
| 0.995 | 2.807033768344 | 0.99999999 | 5.730728868236 |
| 0.997 | 2.967737925342 | 0.999999999 | 6.109410204869 |
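A few of the tabulated values can be reproduced with the normal quantile function from Python's standard library. This is a sketch under the assumption that the table pairs each confidence level with the two-sided quantile, that is, the inverse normal CDF evaluated at (1 + level)/2. The helper name `z_score` is illustrative.

```python
from statistics import NormalDist


def z_score(gamma: float) -> float:
    """Two-sided standard normal quantile; undefined outside 0 < gamma < 1."""
    if not 0.0 < gamma < 1.0:
        raise ValueError("z is undefined unless 0 < gamma < 1")
    return NormalDist().inv_cdf((1.0 + gamma) / 2.0)


# Print a handful of confidence levels next to their quantiles.
for gamma in (0.68, 0.90, 0.95, 0.99):
    print(f"{gamma:<5} {z_score(gamma):.6f}")
```

Levels extremely close to 1 are left out of the loop because floating-point rounding of (1 + level)/2 limits how many digits can be trusted there.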

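Since $\max \sigma_P^2 = \max p(1-p) = 0.25$ at $p = 0.5$, we can arbitrarily set $p = \overline{p} = 0.5$, calculate $\sigma_P$, $\sigma_{\overline{p}}$, and $z_\gamma \sigma_{\overline{p}}$ to obtain the maximum margin of error for $P$ at a given confidence level $\gamma$ and sample size $n$, even before having actual results. With $p = 0.5$, $n = 1013$:

$$MOE_{95}(0.5) = z_{0.95}\,\sigma_{\overline{p}} \approx z_{0.95}\sqrt{\frac{\sigma_P^2}{n}} \approx 1.96\sqrt{\frac{0.25}{n}} = \frac{0.98}{\sqrt{n}} \approx \pm 3.1\%$$

$$MOE_{99}(0.5) = z_{0.99}\,\sigma_{\overline{p}} \approx z_{0.99}\sqrt{\frac{\sigma_P^2}{n}} \approx 2.576\sqrt{\frac{0.25}{n}} = \frac{1.288}{\sqrt{n}} \approx \pm 4.0\%$$

Also, usefully, for any reported $MOE_{95}$,

$$MOE_{99} = \frac{z_{0.99}}{z_{0.95}}\,MOE_{95} \approx 1.3 \times MOE_{95}$$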
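The maximum margin of error depends only on the sample size and the confidence level, so it can be computed before any results are in. The sketch below does this for an illustrative sample of 1,013 respondents (an assumed figure) and also shows the scaling factor between the 95% and 99% margins. The function name is hypothetical and the quantile is assumed to be two-sided.

```python
from math import sqrt
from statistics import NormalDist


def max_margin_of_error(n: int, gamma: float = 0.95) -> float:
    """Worst-case margin of error, reached when the reported share is 50%."""
    z = NormalDist().inv_cdf((1.0 + gamma) / 2.0)  # two-sided quantile (assumed)
    return z * sqrt(0.25 / n)                      # 0.25 is the largest p(1-p)


n = 1013  # illustrative sample size
moe95 = max_margin_of_error(n, 0.95)
moe99 = max_margin_of_error(n, 0.99)

print(f"95% level: +/-{moe95:.1%}")
print(f"99% level: +/-{moe99:.1%}")
print(f"ratio 99%/95%: {moe99 / moe95:.2f}")
```

The ratio is independent of the sample size, which is why a reported 95% margin can be rescaled to 99% without knowing how many people were polled.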

Specific margins of error

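If a poll has multiple percentage results (for example, a poll measuring a single multiple-choice preference), the result closest to 50% will have the highest margin of error. Typically, it is this number that is reported as the margin of error for the entire poll. Imagine poll $P$ reports $p_a, p_b, p_c$ as $71\%, 27\%, 2\%$, with $n = 1013$:

- $MOE_{95}(P_a) = z_{0.95}\,\sigma_{\overline{p_a}} \approx 1.96\sqrt{\frac{p_a(1-p_a)}{n}} = \frac{0.89}{\sqrt{n}} \approx \pm 2.8\%$ (as in the figure above)

- $MOE_{95}(P_b) = z_{0.95}\,\sigma_{\overline{p_b}} \approx 1.96\sqrt{\frac{p_b(1-p_b)}{n}} = \frac{0.87}{\sqrt{n}} \approx \pm 2.7\%$

- $MOE_{95}(P_c) = z_{0.95}\,\sigma_{\overline{p_c}} \approx 1.96\sqrt{\frac{p_c(1-p_c)}{n}} = \frac{0.27}{\sqrt{n}} \approx \pm 0.9\%$

As a given percentage approaches the extremes of 0% or 100%, its margin of error approaches ±0%.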
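To see how the margin shrinks as a result moves away from 50%, the sketch below computes a separate 95% margin for each result of a multiple-choice poll. The shares of 71%, 27%, and 2% and the sample size of 1,013 are illustrative values, and `proportion_moe` is a hypothetical helper name.

```python
from math import sqrt
from statistics import NormalDist


def proportion_moe(p: float, n: int, gamma: float = 0.95) -> float:
    """Margin of error for one reported share p from n respondents."""
    z = NormalDist().inv_cdf((1.0 + gamma) / 2.0)  # two-sided quantile (assumed)
    return z * sqrt(p * (1.0 - p) / n)


shares = {"a": 0.71, "b": 0.27, "c": 0.02}  # illustrative poll results
n = 1013                                    # illustrative sample size

moes = {name: proportion_moe(p, n) for name, p in shares.items()}
for name, moe in moes.items():
    print(f"{name}: {shares[name]:.0%} +/-{moe:.1%}")

# The share closest to 50% carries the largest margin.
widest = max(moes, key=moes.get)
print("largest margin:", widest)
```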

Comparing percentages

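Imagine multiple-choice poll $P$ reports $p_a, p_b, p_c$ as $46\%, 42\%, 12\%$, with $n = 1013$. As described above, the margin of error reported for the poll would typically be $MOE_{95}(P_a)$, as $p_a$ is closest to 50%. The popular notion of statistical tie or statistical dead heat, however, concerns itself not with the accuracy of the individual results, but with that of the ranking of the results. Which is in first?

If, hypothetically, we were to conduct poll $P$ over subsequent samples of $n$ respondents (newly drawn from $N$), and report the result $p_w = p_a - p_b$, we could use the standard error of difference to understand how $p_{w_1}, p_{w_2}, \ldots$ are expected to fall about $\overline{p_w}$. For this, we need to apply the sum of variances to obtain a new variance, $\sigma_{P_w}^2$,

$$\sigma_{P_w}^2 = \sigma_{P_a - P_b}^2 = \sigma_{P_a}^2 + \sigma_{P_b}^2 - 2\sigma_{P_a,P_b} = p_a(1-p_a) + p_b(1-p_b) + 2p_a p_b$$

where $\sigma_{P_a,P_b} = -p_a p_b$ is the covariance of $P_a$ and $P_b$.

Thus (after simplifying),

$$\text{Standard error of difference} = \sigma_{\overline{w}} \approx \sqrt{\frac{\sigma_{P_w}^2}{n}} = \sqrt{\frac{p_a + p_b - (p_a - p_b)^2}{n}} \approx 0.029, \quad P_w = P_a - P_b$$

$$MOE_{95}(P_a) = z_{0.95}\,\sigma_{\overline{p_a}} \approx \pm 3.1\%$$

$$MOE_{95}(P_w) = z_{0.95}\,\sigma_{\overline{w}} \approx \pm 5.8\%$$

Note that this assumes that $P_c$ is close to constant, that is, respondents choosing either A or B would almost never choose C (making $P_a$ and $P_b$ close to perfectly negatively correlated). With three or more choices in closer contention, choosing a correct formula for $\sigma_{P_w}^2$ becomes more complicated.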
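The margin of error for a lead between two options can be sketched in the same way. The snippet below combines the two variances with a covariance equal to minus the product of the two shares, which is the assumption stated above for choices drawn from a single poll. The shares of 46% and 42%, the sample size of 1,013, and the function name are illustrative.

```python
from math import sqrt
from statistics import NormalDist


def difference_moe(p_a: float, p_b: float, n: int, gamma: float = 0.95) -> float:
    """Margin of error for the lead p_a - p_b within a single poll."""
    # Var(A - B) = Var(A) + Var(B) - 2 Cov(A, B), with Cov(A, B) = -p_a * p_b.
    variance = p_a * (1 - p_a) + p_b * (1 - p_b) + 2 * p_a * p_b
    z = NormalDist().inv_cdf((1.0 + gamma) / 2.0)  # two-sided quantile (assumed)
    return z * sqrt(variance / n)


p_a, p_b, n = 0.46, 0.42, 1013  # illustrative results and sample size
lead = p_a - p_b
moe_lead = difference_moe(p_a, p_b, n)

print(f"lead: {lead:.1%} +/-{moe_lead:.1%}")
print("lead exceeds its margin:", lead > moe_lead)
```

In this example the margin on the lead is nearly twice the margin on either individual result, which is why a gap that looks larger than the poll's headline margin can still fail to separate the two options.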

Effect of finite population size

The formulae above for the margin of error assume that there is an infinitely large population and thus do not depend on the size of population $N$, but only on the sample size $n$. According to sampling theory, this assumption is reasonable when the sampling fraction is small. The margin of error for a particular sampling method is essentially the same regardless of whether the population of interest is the size of a school, city, state, or country, as long as the sampling fraction is small.

In cases where the sampling fraction is larger (in practice, greater than 5%), analysts might adjust the margin of error using a finite population correction (FPC) to account for the added precision gained by sampling a much larger percentage of the population. The FPC can be calculated using the formula[1]

$$\operatorname{FPC} = \sqrt{\frac{N-n}{N-1}}$$

...and so, if poll $P$ were conducted over 24% of, say, an electorate of 300,000 voters,

$$MOE_{95}(0.5) = z_{0.95}\,\sigma_{\overline{p}} \approx \frac{0.98}{\sqrt{72{,}000}} \approx \pm 0.4\%$$

$$MOE_{95_{FPC}}(0.5) = z_{0.95}\,\sigma_{\overline{p}}\sqrt{\frac{N-n}{N-1}} \approx \frac{0.98}{\sqrt{72{,}000}}\sqrt{\frac{300{,}000 - 72{,}000}{300{,}000 - 1}} \approx \pm 0.3\%$$

Intuitively, for appropriately large $N$,

$$\lim_{n \to 0}\sqrt{\frac{N-n}{N-1}} \approx 1$$

$$\lim_{n \to N}\sqrt{\frac{N-n}{N-1}} = 0$$

In the former case, $n$ is so small as to require no correction. In the latter case, the poll effectively becomes a census and sampling error becomes moot.
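The effect of the correction can be checked numerically. The sketch below applies the standard finite population correction, the square root of (N − n)/(N − 1), to the example of a poll covering 24% of an electorate of 300,000. The function names are illustrative and the reported share is assumed to be 50%.

```python
from math import sqrt
from statistics import NormalDist


def fpc(population: int, sample: int) -> float:
    """Finite population correction factor sqrt((N - n) / (N - 1))."""
    return sqrt((population - sample) / (population - 1))


def max_moe(sample: int, gamma: float = 0.95) -> float:
    """Uncorrected worst-case margin of error at a reported share of 50%."""
    z = NormalDist().inv_cdf((1.0 + gamma) / 2.0)  # two-sided quantile (assumed)
    return z * sqrt(0.25 / sample)


N = 300_000  # electorate size from the example above
n = 72_000   # 24% of the electorate

uncorrected = max_moe(n)
corrected = uncorrected * fpc(N, n)

print(f"uncorrected: +/-{uncorrected:.2%}")
print(f"corrected:   +/-{corrected:.2%}")
print(f"FPC for a tiny sample: {fpc(N, 10):.4f}")
print(f"FPC for a census:      {fpc(N, N):.4f}")
```

The last two lines illustrate the limiting cases: the factor stays near 1 for a very small sample and drops to 0 when the whole population is surveyed.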

See also

- Engineering tolerance

- Key relevance

- Measurement uncertainty

- Observational error

- Random error

Notes

- [1] Isserlis, L. (1918). "On the value of a mean as calculated from a sample". Journal of the Royal Statistical Society. Blackwell Publishing. 81 (1): 75–81. doi:10.2307/2340569. JSTOR 2340569. (Equation 1)

Source: https://en.wikipedia.org/wiki/Margin_of_error

Posted by: davingoetted84.blogspot.com
